I found a project called Gallery Project from TurnKey Linux. I am lazy and wanted to get it up and running with minimal effort, so I was looking for a turnkey solution that I could just install on my home Proxmox server.

STEP 1 - Downloading the LXC Template (tar.gz)

Click the link below to download the current version of Gallery Project, which is 14.2:

http://mirror.turnkeylinux.org/turnkeylinux/images/proxmox/debian-8-turnkey-gallery_14.2-1_amd64.tar.gz
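If you prefer to script the download, here is a minimal Python sketch. The file naming pattern is an assumption inferred from the single Gallery URL above (Debian release, appliance name, version, architecture); the helper names `template_url` and `download_template` are hypothetical, and other appliances or newer releases may be named differently.

```python
import os
import urllib.request

# Mirror directory taken from the Gallery URL in this post.
MIRROR = "http://mirror.turnkeylinux.org/turnkeylinux/images/proxmox/"


def template_url(app: str, version: str, debian: int = 8, arch: str = "amd64") -> str:
    """Build a template URL using the naming pattern seen in the Gallery file.

    Assumption: every template follows debian-<N>-turnkey-<app>_<version>_<arch>.tar.gz.
    """
    filename = f"debian-{debian}-turnkey-{app}_{version}_{arch}.tar.gz"
    return MIRROR + filename


def download_template(url: str, dest_dir: str = ".") -> str:
    """Download the template into dest_dir and return the local file path."""
    path = os.path.join(dest_dir, url.rsplit("/", 1)[-1])
    urllib.request.urlretrieve(url, path)
    return path


if __name__ == "__main__":
    # Only prints the URL; call download_template(url) to actually fetch it.
    print(template_url("gallery", "14.2-1"))
```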

The same mirror directory lists the LXC tar.gz templates for the other TurnKey Linux appliances that you can run on Proxmox: http://mirror.turnkeylinux.org/turnkeylinux/images/proxmox/

STEP 2 - Uploading LXC Template to Proxmox

Once you have downloaded the tar.gz LXC template in Step 1, you need to upload it to a Proxmox storage as a container template so that you can use it when creating a CT (container) in Proxmox. This is done from the storage's Upload dialog in the Proxmox web interface:

Select 'Container template' as the content type, select your .tar.gz file, then click Upload.
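If you have shell access to the Proxmox host, an alternative to the web upload is to copy the file straight into the template directory of the default `local` storage, which is `/var/lib/vz/template/cache`. The Python sketch below assumes that default storage layout (other storages use a different path) and that it runs on the host as root; the function names are hypothetical.

```python
import os
import shutil

# Assumption: the default "local" directory storage. Container templates
# for that storage live here; adjust for any other storage you use.
TEMPLATE_CACHE = "/var/lib/vz/template/cache"


def template_destination(tarball: str, cache_dir: str = TEMPLATE_CACHE) -> str:
    """Return where the template should be copied, rejecting non-.tar.gz files."""
    name = os.path.basename(tarball)
    if not name.endswith(".tar.gz"):
        raise ValueError(f"expected a .tar.gz container template, got {name!r}")
    return os.path.join(cache_dir, name)


def install_template(tarball: str, cache_dir: str = TEMPLATE_CACHE) -> str:
    """Copy the template into cache_dir and return its new path."""
    dest = template_destination(tarball, cache_dir)
    os.makedirs(cache_dir, exist_ok=True)
    shutil.copy2(tarball, dest)
    return dest


def template_volid(tarball: str, storage: str = "local") -> str:
    """Volume ID Proxmox uses to refer to a container template on a storage."""
    return f"{storage}:vztmpl/{os.path.basename(tarball)}"
```

After copying, the template should appear in the storage's content list just as if it had been uploaded through the web interface.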

STEP 3 - Create LXC Container

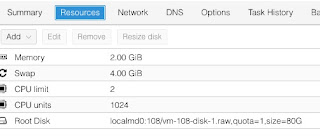

I used the settings shown above (yours may be different). Make a note of the root password you set here, because you will need it to log in in Step 4.
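The same container can also be created from the Proxmox host shell with `pct create`. The Python sketch below only builds the argument list and, by default, prints it instead of running it. The VM ID, hostname, memory, cores, `local-lvm:8` root disk (storage name and size in GB) and `vmbr0` DHCP network are illustrative assumptions, not the exact settings from the screenshot; the function names are hypothetical.

```python
import shlex
import subprocess


def pct_create_cmd(vmid: int, template: str, hostname: str, password: str,
                   memory_mb: int = 512, cores: int = 1,
                   rootfs: str = "local-lvm:8", bridge: str = "vmbr0") -> list[str]:
    """Build a `pct create` argument list; all defaults are example values."""
    return [
        "pct", "create", str(vmid), template,
        "--hostname", hostname,
        "--password", password,          # root password used at the first login
        "--memory", str(memory_mb),      # RAM in MB
        "--cores", str(cores),
        "--rootfs", rootfs,              # <storage>:<size in GB> for the root disk
        "--net0", f"name=eth0,bridge={bridge},ip=dhcp",
    ]


def create_container(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command (dry run) or execute it on the Proxmox host."""
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    create_container(pct_create_cmd(
        100,
        "local:vztmpl/debian-8-turnkey-gallery_14.2-1_amd64.tar.gz",
        "gallery",
        "change-me",
    ))
```

Passing the password on the command line leaves it in the shell history and process list, so the web interface is the safer place to set it if that matters on your network.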

STEP 4 - Start the Gallery Container and Log In to Initialize

- Start the container and open its console (click Console > noVNC)

- Log in as root using the password that you set in Step 3.

- Go through the TurnKey initialization process (make sure to write down the passwords you set)
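Instead of noVNC, the container can also be started and attached to from the Proxmox host shell with `pct start` and `pct console`. The sketch below assumes VM ID 100 (a hypothetical value; use your own) and prints the commands by default rather than running them. To leave a `pct console` session, press Ctrl+a and then q.

```python
import subprocess


def start_and_attach(vmid: int, dry_run: bool = True) -> list[list[str]]:
    """Start the container, then attach to its console for the first login."""
    cmds = [
        ["pct", "start", str(vmid)],    # boot the container
        ["pct", "console", str(vmid)],  # attach to its console (login prompt)
    ]
    for cmd in cmds:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
    return cmds
```

Calling `start_and_attach(100, dry_run=False)` on the host runs both commands; the login and TurnKey initialization then continue exactly as in the noVNC console.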

Once you are done, you should see the appliance summary page.

Take note of the IP address and port numbers; you will need them later.
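Before opening a browser, you can confirm that the appliance answers on the address and port from the summary page. This Python sketch only tests whether a TCP connection succeeds; the function name is hypothetical, and the address and port you pass are whatever your summary page lists.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

For example, `port_is_open("192.168.1.50", 443)` (a hypothetical address) returning False usually means the container is not running yet, it got a different IP address from DHCP, or a firewall is blocking that port.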