Last week I attempted to install pfSense as a QEMU virtual machine on my Proxmox 4.x server. I have an extra NIC with a 1Gbps port, and I thought it would be cool to retire my router and route everything through pfSense, because pfSense is an awesome firewall with tons of features.

I installed it using the following steps. It was easy and I did not experience any issues:

1. Downloaded the ISO (AMD64) from the pfSense download page.

At the time of this writing, the newest stable AMD64 version was: pfSense-CE-2.3.1-RELEASE-amd64.iso

I copied the live-CD ISO to /mnt/{your local storage}/template/iso

(I have also included a link to my downloadable QEMU backup image, which you can restore within 5 minutes; see below.)

2. Edited my network interface settings in /etc/network/interfaces to add my extra NIC as vmbr1

I added:

    allow-hotplug eth1
    iface eth1 inet manual

    auto vmbr1
    iface vmbr1 inet dhcp
        bridge_ports eth1
        bridge_stp off
        bridge_fd 0

3. Created Proxmox VM with the following settings:

CPU: 1 socket, 2 cores. The default CPU type, kvm64 (qemu64), did not work for me.

RAM: 512MB

Disk: 8GB, virtio (scsi, qcow2)

Network: Virtio (bridged)
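For anyone who prefers the Proxmox shell over the web UI, here is a rough sketch of how the settings above might map onto a `qm create` call. The VM ID (106), the storage name (`local`), the VM name and the choice of `--virtio0` for the disk are my assumptions, not something the steps above dictate. The `--cpu` option is left out on purpose because the working CPU type is not named here, so you would need to pick one yourself. The function only prints the command so you can review it before running anything.

```shell
#!/bin/sh
# Assumptions (hypothetical values, adjust to your setup):
VMID=106          # VM ID, chosen here for illustration
STORAGE=local     # storage that holds the disk image and the ISO

# Print the qm command instead of running it, so it can be reviewed first.
# Note: no --cpu option is set; choose a CPU type that works on your host.
pfsense_vm_cmd() {
    printf '%s\n' "qm create $VMID --name pfsense --sockets 1 --cores 2 --memory 512 --virtio0 $STORAGE:8,format=qcow2 --net0 virtio,bridge=vmbr0 --net1 virtio,bridge=vmbr1 --cdrom $STORAGE:iso/pfSense-CE-2.3.1-RELEASE-amd64.iso"
}

pfsense_vm_cmd
```

If the printed command looks right for your host, you can paste it into a root shell on the Proxmox server.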

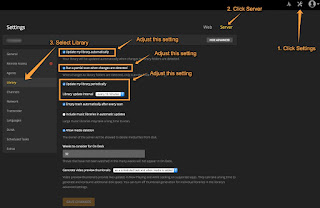

here is a screenshot of my configuration in proxmox ve:

Important: Once the pfSense web configurator is running, make sure to go to System > Advanced > Networking and disable hardware checksum offload. If you do not do this, network traffic from LAN to WAN will be slow and will not work well.

Here is a good read about the VirtIO network driver for pfSense: https://doc.pfsense.org/index.php/VirtIO_Driver_Support

4. Once pfSense had booted, I also added the following options to /boot/loader.conf

    hint.apic.0.clock=0
    kern.hz=100
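If you would rather script this than edit the file by hand, a minimal sketch could look like the one below. It appends each option only when that exact line is not already present, so running it twice does not duplicate entries. The `add_loader_opt` helper and the `LOADER_CONF` variable are names I made up for illustration. The default points at a scratch file so the sketch is safe to try, and on the pfSense VM you would set it to /boot/loader.conf.

```shell
#!/bin/sh
# LOADER_CONF is a hypothetical variable; it defaults to a scratch file.
# On the pfSense VM, run with LOADER_CONF=/boot/loader.conf instead.
LOADER_CONF="${LOADER_CONF:-./loader.conf.sketch}"

# Append the line in "$1" only if it is not already in the file.
add_loader_opt() {
    touch "$LOADER_CONF"
    grep -qxF -- "$1" "$LOADER_CONF" || printf '%s\n' "$1" >> "$LOADER_CONF"
}

add_loader_opt 'hint.apic.0.clock=0'
add_loader_opt 'kern.hz=100'
```

The new values only take effect after the VM is rebooted, since loader.conf is read at boot.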

SLOW and DISAPPOINTING PERFORMANCE

After I got everything running and was able to use this pfSense firewall as my main router, I noticed that CPU utilization was much higher than I expected.

In Proxmox, this VM's CPU utilization was 15 - 40%

In the pfSense dashboard, CPU utilization was reported at 20% - 87%

Then I ran some speed tests, and I was disappointed. This pfSense VM performed at least 40% slower than my Netgear / Asus gigabit router! I have gigabit internet connectivity, and I get approximately 800 - 920Mbps through my AC wireless routers. With this pfSense VM, however, I could only achieve 350 - 480Mbps. And while the speed test (from Speedtest.net) was running, CPU utilization on the pfSense dashboard went up to 87%!
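To put a number on the gap, here is a small sketch that works out the slowdown from the measured ranges above. The `slowdown_pct` function name is mine, and shell arithmetic truncates to whole percentages, so treat the results as rough figures.

```shell
#!/bin/sh
# slowdown_pct VM_MBPS ROUTER_MBPS
# Prints how much slower the VM is than the router, in whole percent
# (integer division, so the result is truncated, not rounded).
slowdown_pct() {
    echo $(( ( $2 - $1 ) * 100 / $2 ))
}

slowdown_pct 480 800   # best VM result vs. slowest router result: prints 40
slowdown_pct 350 920   # worst VM result vs. fastest router result: prints 61
```

So depending on which ends of the ranges you compare, the VM was somewhere between 40% and roughly 61% slower than the hardware router.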

Summary

After spending 4 - 5 hours installing and setting up pfSense on Proxmox, I decided not to use it. I am disappointed with its performance! I use pfSense at work (also virtualized inside Proxmox) and I am very happy with it there. Maybe the high CPU usage and slow performance are due to the quad-core Celeron J1900 CPU in my home server? I am not sure.

Downloadable pfSense QEMU / KVM Image

Even though I have decided NOT to use this pfSense VM configuration, I have a brand-new, tested and working pfSense configuration with default settings.

If you need to get up and running quickly with pfSense version 2.3.1 on Proxmox QEMU, you can download my image here:

https://dl.dropboxusercontent.com/u/32732184/vzdump-qemu-106-2016_06_06-11_33_43-pfsense-231.vma.lzo

Things to do after you have restored the image above:

1. Set your network interfaces and turn them on.

I have set both net0 and net1 to vmbr0 so that you can boot pfSense immediately, but you probably want to set net1 to vmbr1.

2. I have also set the links to disconnected (link_down=1), so you will probably want to enable both before using them.
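The restore and the two follow-up steps could be sketched from the Proxmox shell roughly as follows. I am assuming the downloaded archive sits in the current directory and that VM ID 106 (taken from the archive file name) is free on your host; both are guesses about your setup. The function only prints the commands so that nothing is changed until you run them yourself.

```shell
#!/bin/sh
# Assumptions (hypothetical values, adjust to your setup):
VMID=106   # taken from the archive name; must not already exist on the host
ARCHIVE=vzdump-qemu-106-2016_06_06-11_33_43-pfsense-231.vma.lzo

# Print the restore and network commands for review instead of running them.
post_restore_cmds() {
    echo "qmrestore $ARCHIVE $VMID"
    # link_down=0 brings the virtual link back up.
    # Rewriting a NIC without a MAC address likely assigns a new one.
    echo "qm set $VMID --net0 virtio,bridge=vmbr0,link_down=0"
    echo "qm set $VMID --net1 virtio,bridge=vmbr1,link_down=0"
}

post_restore_cmds
```

You can make the same changes in the web UI under the VM's Hardware tab if you prefer to keep the existing MAC addresses.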